Flying swarms of drones is a very interesting challenge with numerous important applications.

This article explores the practicalities of simulating a swarm in a container environment with successful outcomes, simulation being an essential early step towards a real deployment.

Introduction

The goal is to flexibly operate a multi-drone swarm entirely in software. Flexible here refers to the ability to launch arbitrary numbers of drones—a swarm—with no predetermined configuration. Ground control will pick up and operate any new drones that appear on the network based on MAVLink UDP broadcasting. Conversely, drones can land and disappear from the swarm, say for battery charging or replacement, only to reappear when ready again.

The article starts with a worked example: a live swarm sortie simulation.

Worked Example

The rough outline aims to achieve these sub-goals.

- Build and launch a PX4 software-in-the-loop (SITL) container.

- Build and launch a MAVLink routing container.

- Use QGroundControl to co-ordinate a multi-drone sortie.

Docker Client

The groundwork begins with a connection to a Docker engine. The following snippet connects to the local Docker machine on the unencrypted, non-TLS port 2375 over TCP. Docker Desktop switches this option off by default; switch it on.

    library(reticulate)

    # For the following to work, the Docker daemon must expose
    # port 2375 on the local host.
    docker <- import("docker")
    docker.client <- docker$DockerClient("tcp://localhost:2375")

This worked example utilises R as the coordination layer. Although other alternatives exist, R allows for a succinct functional approach and is well-suited for data-wrangling tasks.

Software in the Loop (SITL)

First, the simulation needs to launch PX4 Autopilot in software simulation mode. Not as easy as it sounds. Building the software is one thing. Launching it is another thing entirely. Operating multiple drones is paramount. The build tools do support multiple simultaneous simulations but not in containers.

Docker container for PX4 SITL

The following Dockerfile applies basic build steps to the PX4-Autopilot source repository using the Jammy-based development container.

    FROM px4io/px4-dev-nuttx-jammy

    # The simulator runs *without* upgrading Jammy. It does not display the
    # graphics, however. Upgrading fixes it.
    RUN apt update && apt upgrade -y

    RUN git clone -b jmavsim-run-p-instance \
        --recurse-submodules https://github.com/royratcliffe/PX4-Autopilot.git
    WORKDIR PX4-Autopilot
    RUN make px4_sitl build_jmavsim_iris

    WORKDIR build/px4_sitl_default/src/modules/simulation/simulator_mavlink
    ENTRYPOINT [ "cmake", "-E", "env", "PX4_SYS_AUTOSTART=10017", \
        "../../../../bin/px4" ]

The image entry point launches CMake from the MAVLink simulator directory. This gives the launcher access to PX4’s argument list. Arguments added to the image launcher become arguments added to the PX4 command line.

Launching the PX4 SITL container

By default, the launcher function listed below creates a headless simulation with automatic container removal on exit.

    px4.sitl <- \(docker.client,
                  headless = TRUE,
                  remove = TRUE,
                  expose = 0L) {
      # Publish the instance's UDP port on the same host port number.
      ports <- list()
      ports[[paste0(18570L + expose, "/udp")]] <- 18570L + expose
      docker.client$containers$run(
        docker.client$images$get(name = "royratcliffe/px4-sitl"),
        # Arguments passed through the image entry point to px4.
        command = c(
          "-d",
          "-i", expose),
        environment = list(
          DISPLAY = ":0",
          HEADLESS = as.integer(headless)),
        volumes = list(
          "/run/desktop/mnt/host/wslg/.X11-unix" = list(
            bind = "/tmp/.X11-unix")),
        ports = ports,
        remove = remove,
        detach = TRUE)
    }

Points to note:

- The ‘command’ key passes px4 arguments: -d for daemon mode, -i for the instance. The latter offsets the MAVLink system numbers so that multiple simulations appear as distinct drones rather than spooky replications of the same drone.
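The relationship between the instance number, the published port and the px4 arguments can be inspected in isolation. The following is a minimal sketch only: the helper name px4.sitl.args is hypothetical and does not appear in the launcher above. It reproduces the launcher's arithmetic without needing a Docker engine.

```r
# Hypothetical helper (not part of the original launcher): computes the
# values that px4.sitl() would hand to Docker for a given instance.
px4.sitl.args <- \(expose = 0L) {
  port <- 18570L + expose
  ports <- list()
  # Container port "<port>/udp" maps to the same port number on the host.
  ports[[paste0(port, "/udp")]] <- port
  # c() coerces the integer instance number to a character string.
  list(command = c("-d", "-i", expose), ports = ports)
}

args <- px4.sitl.args(3L)
print(args$command)       # "-d" "-i" "3"
print(names(args$ports))  # "18573/udp"
```

Each instance therefore claims its own UDP port, so two simulations launched with different expose values never compete for the same host port.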

MAVLink Routing

Build a container image and then launch it.

Docker container for MAVLink router

The Dockerfile below uses Alpine Linux, whose small size makes it ideal for compact Docker images and small deployments.

    FROM alpine:3 AS builder
    RUN apk update && apk add --no-cache \
        gcc \
        g++ \
        git \
        pkgconf \
        meson \
        ninja \
        linux-headers \
        && rm -rf /var/cache/apk/*
    RUN git clone --recursive \
        https://github.com/mavlink-router/mavlink-router \
        && cd mavlink-router \
        && meson setup \
            -Dsystemdsystemunitdir=/usr/lib/systemd/system \
            --buildtype=release build . \
        && ninja -C build

    FROM alpine:3
    RUN apk update && apk add --no-cache libstdc++
    COPY --from=builder /mavlink-router/build/src/mavlink-routerd \
        /usr/local/bin
    ENTRYPOINT [ "mavlink-routerd" ]

Launching the MAVLink daemon container

Next, define a launcher function for the MAVLink router daemon. Note the UDP broadcast address 172.17.255.255 in the function below. This feature allows dynamic drone detection. The IP address corresponds to the default Docker bridge network, 172.17.0.0/16.
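As a brief aside before the launcher itself, the broadcast address follows from the sub-net by setting every host bit to one. The sketch below is illustrative only: the function name broadcast.address is hypothetical, and it handles plain IPv4 CIDR notation with no validation.

```r
# Hypothetical helper: derive the IPv4 broadcast address from a CIDR block.
broadcast.address <- \(cidr) {
  parts <- strsplit(cidr, "/", fixed = TRUE)[[1]]
  octets <- as.integer(strsplit(parts[1], ".", fixed = TRUE)[[1]])
  prefix <- as.integer(parts[2])
  # Number of host bits that fall within each of the four octets.
  host.bits <- pmin(8L, pmax(0L, 8L * (1:4) - prefix))
  # A mask with all host bits set, octet by octet.
  host.mask <- as.integer(2^host.bits) - 1L
  paste(bitwOr(octets, host.mask), collapse = ".")
}

print(broadcast.address("172.17.0.0/16"))  # "172.17.255.255"
```

Working octet by octet avoids R's 32-bit signed integer limit, which a single packed address such as 172.17.0.0 would exceed.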

    mavlink.routerd <- \(docker.client, ...,
                         client.ips = NULL,
                         client.port = 18570L,
                         client.ip = "172.17.255.255",
                         client.ports = NULL,
                         expose.client = FALSE,
                         server.ports = 14445L,
                         server.ip = "0.0.0.0",
                         remove = TRUE,
                         expose = 0L) {
      udp.names <- \(x) if (!is.null(x)) paste0(x, "/udp")
      names(client.ports) <- udp.names(client.ports)
      names(server.ports) <- udp.names(server.ports)
      clients <- c(
        if (!is.null(client.ips)) paste(client.ips, client.port, sep = ":"),
        if (!is.null(client.ports)) paste(client.ip, client.ports, sep = ":"))
      command <- c(
        "-t", 0L,
        if (!is.null(clients)) sapply(clients, c, "-e")[2:1, ],
        paste(server.ip, server.ports, sep = ":"), ...)
      ports <- as.list(c(
        # Do *not* expose the client ports as follows.
        # MAVLink router will connect by UDP broadcast
        # on the 172.17.0.0/16 sub-net.
        #
        if (expose.client) client.ports + expose,
        #
        server.ports + expose))
      message(paste(command, collapse = " "))
      docker.client$containers$run(
        docker.client$images$get(name = "royratcliffe/mavlink-routerd"),
        command = command,
        ports = ports,
        remove = remove,
        detach = TRUE)
    }

Note that the client ports require exposure; otherwise, routing fails.
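The terse sapply() expression in the launcher interleaves an "-e" flag before every client endpoint. The sketch below isolates that step with two client ports so that the resulting command line can be read directly. It assumes, as the launcher appears to, that mavlink-routerd takes "-t 0" to disable its TCP listener and "-e" to add a UDP endpoint; the variable names are for illustration only.

```r
client.ip <- "172.17.255.255"
client.ports <- 18570:18571
clients <- paste(client.ip, client.ports, sep = ":")

# sapply() builds a two-row matrix: endpoints in row 1, "-e" in row 2.
# Reversing the rows and flattening column by column interleaves them.
endpoints <- unname(c(sapply(clients, c, "-e")[2:1, ]))

# c() coerces the integer 0L to the string "0".
command <- c("-t", 0L, endpoints, "0.0.0.0:14445")
cat(command, "\n")
# -t 0 -e 172.17.255.255:18570 -e 172.17.255.255:18571 0.0.0.0:14445
```

A more explicit alternative would be rbind("-e", clients), which gives the same interleaving once flattened.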

Ground Control to Major Tom

Putting it all together: launch ground control, then the router, then the drone swarm members one by one.

Run QGroundControl. Enable MAVLink forwarding to localhost:14445 in order to relay the ground-station MAVLink heartbeat.

Launch a MAVLink router daemon. Bind to client ports 18570 through 18579. This allows for up to ten drones in a swarm as a starting point, just because there are ten digits, but no practical limit exists.

    mavlink.routerd(docker.client,
                    client.ports = 18570:18579,
                    "-e", "192.168.3.133:14550",
                    "-v")

Next launch a drone simulation. Make it non-headless meaning that it will display a 3D projection of the drone.

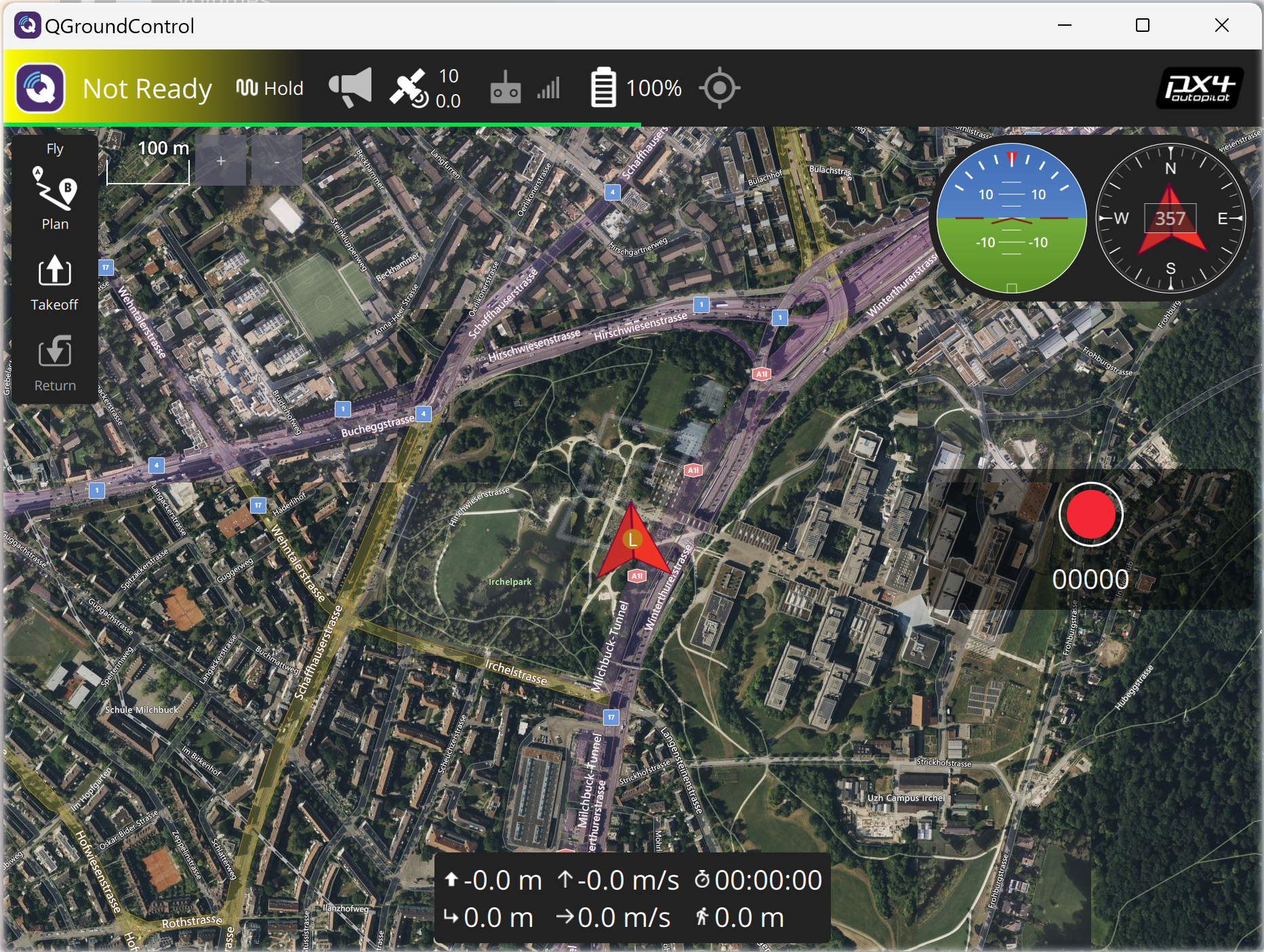

    px4.sitl(docker.client, expose = 1L, headless = FALSE)

Ground control displays it. See Figure 1. The new pup[1] appears automatically within ground control. Next, set Vehicle 1 up for an orbit around the field at Irchel Park, Zürich, Switzerland.

Figure 1: Connecting the first SITL

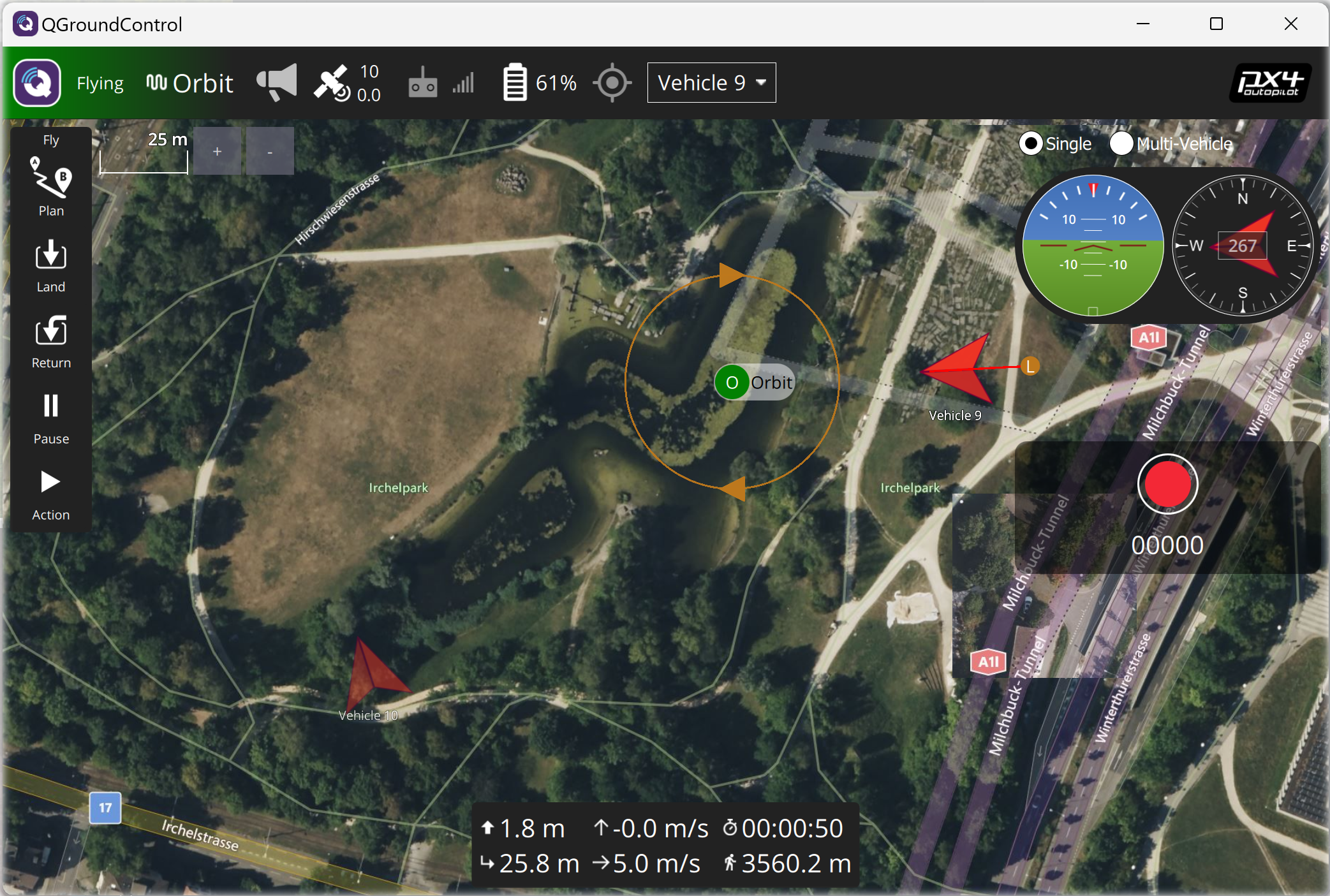

Next, launch another, but this time with expose = 9L for simulation instance cardinal 9. Ground control automatically connects and finds the new vehicle. See Figure 2. The new swarm member receives a complimentary orbit around the swimming lake to the east of the field.

Figure 2: Connecting the second SITL

Conclusions

PX4 Autopilot does not play nicely with containerised simulation—not without some patching. PX4 uses a cardinal “instance” concept in order to differentiate one drone from another on the MAVLink sub-net. The swarm simulation therefore needs to launch an individual simulation with its differentiating instance cardinal.

Nevertheless, containerisation makes a swarm simulation possible without too much effort. The PX4 instances run within their own container with all its dependencies; the MAVLink router runs within its own little Alpine; and ground control runs within Windows natively. Docker acts as a bridging technology, effectively emulating the radio waves and the transceivers used for ground-air inter-meshing.

The container definitions deserve some performance tuning. In SITL mode, PX4 runs flat out as if on a dedicated microcontroller. That works for a system-on-chip but less so when running on a shared host, even one with multiple cores. The ‘firmware’ or its scheduler should ideally ‘sleep’ its allocated core so that other parts of the shared container host can breathe. The perfectly emulated solution should throttle down the simulation so that its performance matches some specified target microcontroller operating at a realistic lower clock speed; this leaves scope for future development work.

MAVLink is a surprisingly simple but simultaneously sophisticated publish-and-subscribe protocol over UDP. It lends itself to swarming. Drones can enter and leave the swarm dynamically. Because the swarm presents itself as a flat stream of MAVLink messages, any prospective user interface, diagnostic or artificial intelligence component can ‘tap’ into the stream, either passively for monitoring, diagnostics and persistent logging or, more interestingly, actively for mission planning, on-the-fly re-planning, obstacle avoidance, parameter tuning or any other real-time sortie enhancement. The stream is the sortie.
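To make the idea of tapping the stream concrete, the sketch below decodes the fixed header of a single frame and recovers the system identifier that tells one drone from another. It assumes MAVLink 2 framing, in which a frame opens with the marker byte 0xFD followed by length, flag, sequence, system, component and 24-bit message-identifier fields. The function name mavlink2.header is hypothetical, and the frame is hand-built for illustration, with payload and checksum omitted.

```r
# Hypothetical helper: decode the ten-byte MAVLink 2 header of a raw frame.
mavlink2.header <- \(frame) {
  stopifnot(is.raw(frame), length(frame) >= 10L, frame[1] == as.raw(0xFD))
  b <- as.integer(frame)
  list(
    payload.length = b[2],
    sequence = b[5],
    system.id = b[6],
    component.id = b[7],
    # The message identifier spans three bytes, least significant first.
    message.id = b[8] + 256L * b[9] + 65536L * b[10])
}

# Illustrative header only: message 0 (HEARTBEAT) from system 3, component 1.
frame <- as.raw(c(0xFD, 9, 0, 0, 42, 3, 1, 0, 0, 0))
header <- mavlink2.header(frame)
print(header$system.id)   # 3
print(header$message.id)  # 0
```

A passive monitor could group frames by system.id to follow each swarm member separately; a real tap would also need to verify checksums and handle MAVLink 1 frames.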

Future Directions

The concept of a hive mind appears quite abstract at first blush. Precisely what is a hive mind? Indeed, what is the mind itself? Although every person experiences their mind every day, all day, researchers still cannot pin down exactly what it is or how it works. Materialist philosophy describes its existence as an epiphenomenon: a description of the mechanistic output of the underlying biology. However, it seems more realistic to propose that the mind phenomena exist on an ‘informational’ plane and that plane is not less real because it transcends the physical medium; information exerts a powerful effect on the physical in ways that the physical medium cannot easily explain, almost as if information sits at the very base of reality rather than at the top.

Getting back to drone swarming, the same informational ‘actors’ concept exists in the world of computer software. The architecture of the ‘hive mind’ can exist as a compound ad-hoc set of software actors tied to the signalling medium, the stream. As a practical deployment, the actors would manifest as a containerised stack of ad-hoc AI and deep-learning services transceiving a uniform time-stamped stream of MAVLink-formatted signals. Technologies already exist to support such a deployment. A GPU-accelerated Docker Compose stack tapping into the ground station portal can deploy ad-hoc actors. The hosts for such an actor stack might comprise one or more laptops or more portable[2] headless dedicated compute servers running AI microservices.

A high-performance stream processing backplane such as Redis could act as the streaming medium in order to mediate loss and lag when multiple services operate on the same stream simultaneously. Redis becomes the event source for disparate microservices deployed within their private customised micro-environment. Docker Compose as the container orchestration technology allows for dynamic scaling for micro-services. Operators can scale each service up or down, or even off, according to sortie requirements and available computing power.

As a concrete example: imagine a FIGlet service. The swarm operator can enter some arbitrary text and any available drones in a swarm form up in flight to ‘display’ the text in the sky. If the operator changes the text, the swarm re-forms on the new ‘FIGlet’ arrangement. This sounds ambitious; it is. Still, such a feature is not beyond the hive mind’s capabilities. Its implementation would require multiple overlapping microservices: obstacle avoidance, inter-drone avoidance, in-flight three-dimensional routing planning, and three-dimensional physics simulation—all deployed as containerised microservices interacting with the event-sourced swarm stream and altogether adding up to the hive mind.

[1] Fifield ‘pup’ of Prometheus, Ridley Scott, 2012.

[2] That is, some unit that could be carried by the swarm operator in a backpack: a stack rack.